Identify when AI-driven decisions quietly cross from operational convenience into governance risk.

A reflection tool for leaders navigating AI-affected decisions. This assessment is most often used by privately held and sponsor-backed organizations where decision speed, ownership concentration, and AI-driven execution have outpaced formal governance capacity.

This tool focuses on decision-governance readiness, not on technical AI maturity.

How to Use This Self-Assessment

This self-assessment is designed for reflection, not evaluation.

It takes approximately 10–15 minutes

There are no right or wrong answers

Responses are not scored

You do not need to share your answers with anyone

The purpose of this tool is to help leaders and boards consider a single question:

Are AI-affected decisions in our organization being governed at the level of seriousness their consequences actually require?

If some questions feel easy to answer and others feel less clear, that contrast itself is useful.

Section I

Decision Ownership Reality

AI rarely “makes decisions” on its own. But it often changes how decisions are made, who influences them, and who is accountable when outcomes are questioned.

Consider the following: Who actually owns AI-influenced outcomes?

When AI influences an outcome, could two senior leaders or board members independently name who owns the final decision?

Have we explicitly distinguished between AI being advisory versus determinative — or has automation quietly expanded in the name of efficiency and scale?

If an AI-affected outcome were challenged tomorrow, would accountability be obvious—or would it be debated?

Are there situations where responsibility shifts quietly from people to systems without a deliberate decision to do so?

Reflection prompt:

Where responsibility feels assumed rather than explicit, risk tends to accumulate quietly.

Section II

Decision Consequence & Irreversibility

Not all decisions carry the same weight. Some are easy to reverse. Others are not.

Reflect on the following: Which AI-affected decisions are easy to unwind, and which are not?

Do any AI-affected decisions meaningfully impact employment, access, opportunity, or reputation?

Once implemented, would some AI-influenced decisions be difficult or costly to unwind?

Are AI-affected decisions being classified as ‘routine’ primarily to preserve speed and agility, even when their downstream impact would normally warrant additional governance?

Are formality and oversight aligned with the actual consequences of these decisions?

Reflection prompt:

Low-formality processes applied to high-impact outcomes often signal governance misalignment.

Section III

Governance vs. Comfort

Governance exists not to reassure, but to match oversight to the seriousness of the decision.

Consider: If governance feels easy, it is probably not touching the decisions that matter most.

Are AI-related oversight discussions primarily substantive, or do they function mainly to create comfort?

Has governance evolved in a way that enables safe experimentation and deployment, or has it become primarily defensive and restrictive?

Are review thresholds calibrated to decision risk — or are teams bypassing governance layers to preserve delivery speed?

Would leadership be comfortable explaining how governance works in practice, not just in principle?

Reflection prompt:

Governance that exists only on paper rarely reveals itself—until it fails.

Section IV

Explainability, Trust, and Challenge

Trust depends on the ability to understand, question, and revisit outcomes.

Reflect on the following: When outcomes cannot be meaningfully questioned, trust becomes assumption rather than evidence.

Could a non-technical leader explain how AI influences key outcomes in plain language?

Can AI-affected decisions be meaningfully challenged or reviewed internally?

Is there a clear path for human judgment to intervene when outcomes feel wrong?

Would leadership feel comfortable explaining AI-influenced outcomes publicly if required?

Reflection prompt:

Opacity is often mistaken for sophistication until trust is tested.

Section V

Escalation & Responsibility Thresholds

At some point, decisions stop being purely operational and become legal, fiduciary, or reputational in nature.

Consider: Escalation failure is rarely caused by missing rules — it is caused by unclear ownership at the moment risk becomes real.

Do we know when an AI-related decision stops being a business judgment and requires legal ownership?

Have escalation thresholds been deliberately identified, or are they discovered only after the fact?

Is rapid deployment ever treated as proof of success before governance, risk ownership, and downstream impact are fully evaluated?

Would leadership agree on when external counsel should be engaged—or only in hindsight?

When AI enables faster decision-making, do we deliberately recalibrate governance safeguards — or allow speed to implicitly redefine acceptable risk?

Reflection prompt:

Most escalation failures are due not to ignorance, but to timing.

What This Self-Assessment Can—and Cannot—Tell You

This self-assessment does not determine whether your AI use is:

compliant,

ethical,

lawful,

or appropriate.

It is designed to help you assess whether decision seriousness, governance, and accountability are aligned—or quietly drifting apart.

If several questions were difficult to answer clearly, that does not indicate failure. It indicates that decision discipline may not yet match consequence. That realization is often the moment when thoughtful organizations pause to address risk before proceeding.

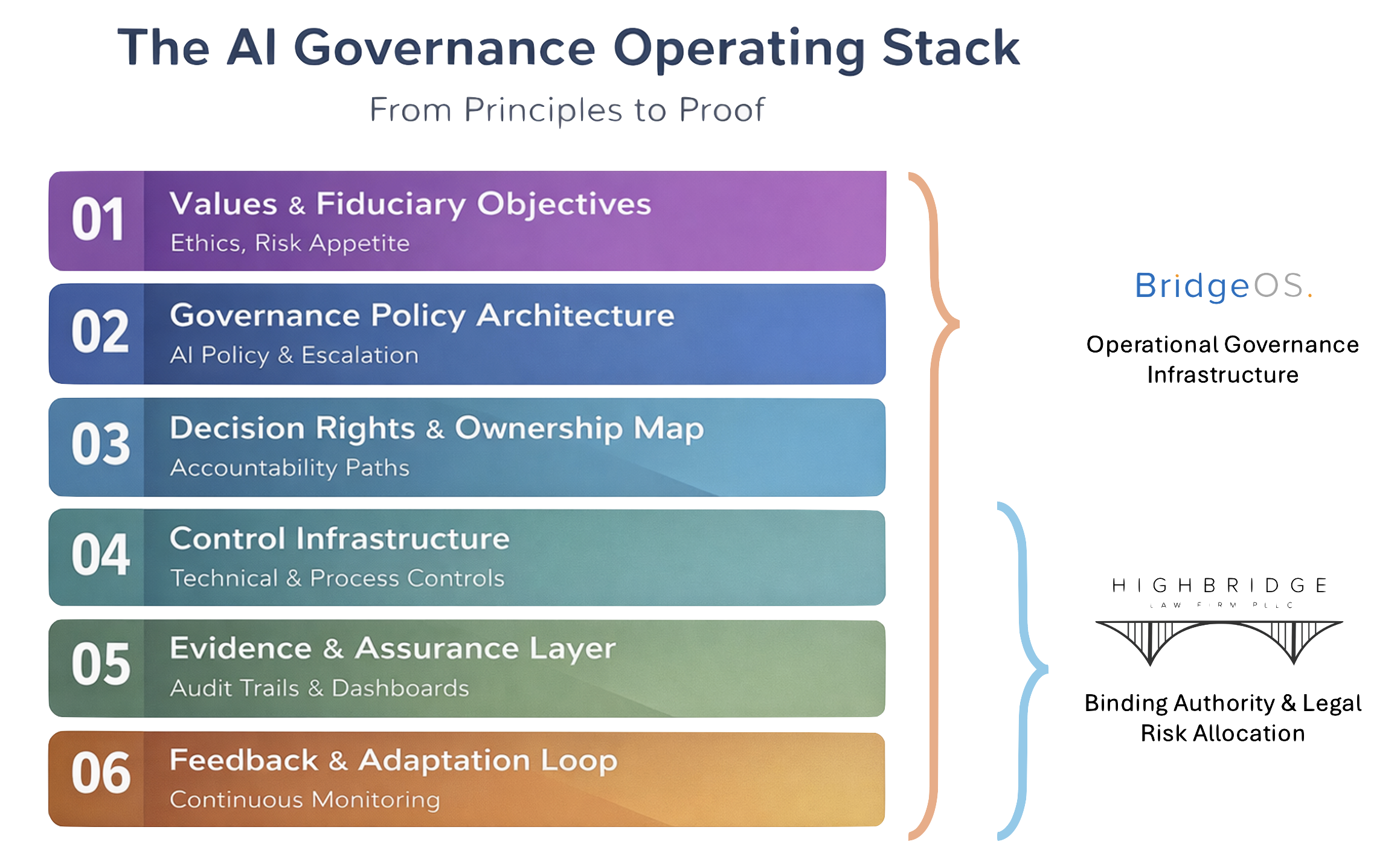

Where BridgeOS Fits

Typical engagements result in a documented decision ownership map, escalation thresholds, and a governance operating blueprint aligned to board oversight.

BridgeOS works with leadership teams and boards at the moment when AI-driven decisions begin carrying governance weight, before formal regulatory, contractual, or fiduciary obligations attach. That usually means before:

policy drafting,

compliance reviews,

vendor selection,

or implementation/escalation.

In short: before legal obligation attaches.

The focus is on clarifying:

decision ownership,

governance alignment,

escalation thresholds,

and responsibility boundaries.

Typical Outcomes

Engagements typically produce:

Decision ownership maps

Escalation thresholds

Governance operating workflows

Board-aligned oversight structures

Where Highbridge Law Fits

When Governance Becomes Legally Binding.

As AI-driven decisions move from internal governance into regulatory exposure, contractual commitments, public accountability, or litigation risk, Highbridge Law supports organizations at the point where governance becomes legally enforceable.

This support typically includes:

policy formalization and board resolutions

risk allocation frameworks

regulatory alignment

contract language and vendor accountability

dispute and liability mitigation

BridgeOS focuses on governance architecture and decision readiness. Highbridge Law activates when legal authority, compliance exposure, or enforceable risk allocation is required.

What’s Next?

If this surfaced governance gaps you weren’t expecting, schedule a short working session here.

Closing Thought

Most Governance Failures Begin Quietly

Organizations rarely get into governance trouble because they move too slowly. They get into trouble because they move forward without realizing how serious the decision has become.

This short self-assessment is intended to help you recognize that moment—early.